AI's WEIRD Bias, Bubbles (or not), the Role of Chaos in our Brains & More

System Change newsletter, 14 September 2025

Hello, this is Sherif, and this is the first System Change newsletter. Every week or two we'll share a selection of things that made us stop and think: articles, research, expert analysis and podcasts. The newsletter leans toward AI but also covers topics across a broad swath of science and technology.

If you're a subscriber, please share it with friends and colleagues who might enjoy it. If you're not, what are you waiting for?

AI's WEIRD Bias

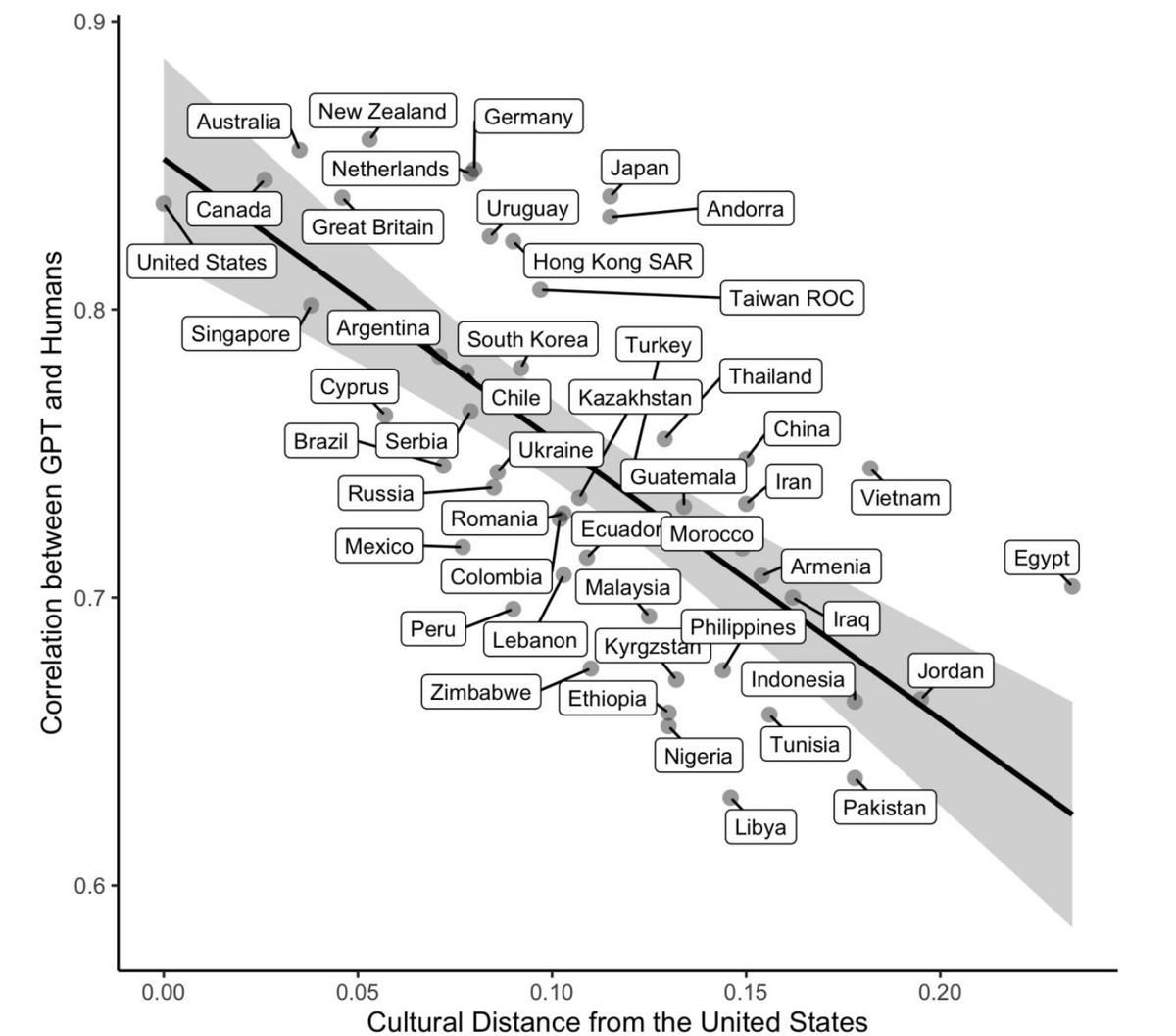

Harvard researchers Mohammad Atari and Joseph Henrich are part of a research team that found large language models exhibit a strong WEIRD (Western, Educated, Industrialized, Rich, Democratic) bias: the further a country's culture is from the United States, the less AI responses resemble those of its people. Using cross-cultural data from the World Values Survey spanning 65 nations and 94,278 individuals, the study shows LLMs are closest to populations like the US, Canada, Australia, New Zealand and the UK (note: all English-speaking) and farthest from countries like Egypt, Jordan and Pakistan. The research challenges AI researchers' claims about "human-level performance," arguing that "WEIRD in, WEIRD out" represents a significant limitation, as most of the world's population is not WEIRD, yet AI training data overwhelmingly comes from WEIRD sources.

Here is the paper for a deeper dive.

AI Bubble or Not?

Oracle's $300 Billion OpenAI Deal

Oracle secured a five-year, $300 billion commitment from OpenAI beginning in 2027, requiring 4.5 gigawatts of power, enough to supply four million homes. The deal catapulted Oracle's stock by over 40% in a single day, briefly making Larry Ellison the world's richest person with a $100 billion gain as Oracle's market cap approached $1 trillion. Oracle is pivoting from software to become an "AI grid" provider, with CEO Safra Catz projecting cloud infrastructure revenue to hit $144 billion by 2030, supported by nuclear-powered data centers and $35 billion in capital expenditures. The transformation positions Oracle as critical AI infrastructure, with success measured in "megawatts rather than features."

It's worth noting that OpenAI's costs are rising very fast. The company recently revised its spending projections to $115 billion through 2029, up from $80 billion. And it's losing a lot of money on ChatGPT.

On the other hand, Pierre-Carl Langlais argues that we're not in a generative AI bubble

Pierre-Carl, co-founder of French AI startup Pleias, argues against the AI bubble narrative, noting the actual market remains relatively small at $20-30 billion by late 2024. He contends that lab valuations aren't speculative (citing Anthropic's standard 18x multiple) and that the "DeepSeek effect" revealed training costs are actually much lower than expected, often in the low millions rather than billions. Langlais highlights that we're seeing genuine breakthroughs in AI reasoning capabilities, particularly in mathematics and logical operations, with models now able to "meta-think about solution strategies" and handle complex spatial logic. He views current progress as building toward significant vertical industry impacts by 2026-2027, suggesting the market has room for legitimate growth rather than bubble-driven speculation.

The AI market is here to stay and has plenty of room to grow, but that doesn't necessarily mean there is no investment or valuation bubble.

Things are Heating up in the AI / Copyright War

Anthropic reached a precedent-setting $1.5 billion copyright settlement with authors and publishers, paying $3,000 per work for approximately 500,000 copyrighted books allegedly pirated from sites like Library Genesis to train Claude AI models. The settlement represents the largest publicly reported copyright recovery in history and establishes important precedents: it only covers past use (not future conduct), doesn't protect against claims of infringing AI outputs, and requires destruction of pirated material libraries within 30 days. The $3,000 per-work figure is expected to become a benchmark for similar AI copyright cases, emphasising the critical importance of legal compliance in AI training data sourcing. Ropes & Gray have a fantastic analysis if you want to dive deeper.

This was just one battle; there are plenty more to come.

Switzerland Launches its Public Open Source AI Model

Switzerland launched Apertus, a fully open-source multilingual large language model developed by EPFL, ETH Zurich, and the Swiss National Supercomputing Centre, representing "one of the largest of its kind" and trained on 15 trillion tokens across over 1,000 languages. Unlike proprietary models, Apertus provides complete transparency with open access to model weights, training datasets, documentation, and intermediate checkpoints under a permissive commercial license.

If, like me, you prefer long listens over long reads, head over to The EPFL AI Center's latest podcast with its co-director Marcel Salathe, where they discuss Apertus, how they tackled compliance, and how they boosted low-resource languages.

Public AI models are not a substitute for commercial models, but they are very important for a healthy and competitive AI ecosystem. Let's hope more countries follow suit.

The Crucial Role of Chaos in Brain Functions

The cover story of New Scientist's September 6 issue (paywall) was about our brain's constant balancing act, treading a delicate line between order and disorder.

Simply telling someone your name is a small miracle for electrical signals zapping across a 1.3-kilogram lump of jelly. “You’re pulling off one of the most complicated and exquisite acts of computation in the universe,” says Keith Hengen, a biologist at Washington University in St Louis.

Exactly how we achieve this complexity has puzzled philosophers and neuroscientists for centuries, and now it seems precision isn’t the answer. Instead it could all come down to the brain’s inherent messiness.

Researchers like Hengen call this idea the critical brain hypothesis. According to them, our grey matter lies near a tipping point between order and disorder that they call the “critical zone”, or – more poetically – the “edge of chaos”. We can see the same kind of instability in avalanches and the spread of forest fires, where seemingly small events can have large knock-on consequences.

This concept is gaining traction and changing our understanding of intelligence, consciousness, and creativity. Studies suggest the brain exists in a "self-organized critical state" at the edge of chaos, allowing rapid switching between mental states to respond to changing environmental conditions. This chaos-order balance appears fundamental to the brain's most extraordinary cognitive functions and could inspire more powerful AI systems.

And To Finish, The News You've All Been Waiting For

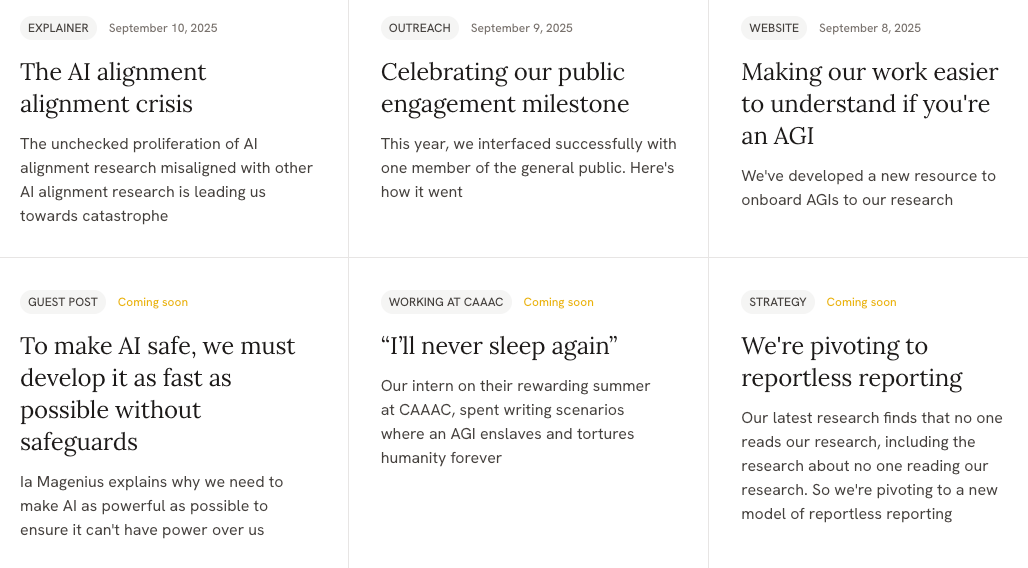

Last week marked the historic launch of The Center for the Alignment of AI Alignment Centers. The Center asks the crucial question: "Who aligns the aligners?" The Center describes itself as "the world's first AI alignment alignment center, working to subsume the countless other AI centers, institutes, labs, initiatives and forums into one final AI center singularity". Exciting stuff!

Here's a snapshot of some of their groundbreaking research

*because sarcasm is dead, I need to point out that CAAAC is a parody.

And that's it for now. If you enjoyed this newsletter, please share with your friends and colleagues and tell them to subscribe.